Key Takeaways

- Data breaches are a major concern with AI journaling tools, especially lesser-known ones whose security measures are unclear.

- AI journaling platforms may also use your entries to train their AI models or build user profiles, raising privacy concerns.

- Be wary of the AI misinterpreting your entries, giving misguided advice, or focusing on irrelevant details.

While AI chatbots like ChatGPT and Gemini are making waves, it's easy to forget that heaps of other tools use AI, too. The latest apps to adopt the technology are online journaling tools, which let you write down and sort through your thoughts with a dedicated AI assistant.

While these tools offer convenience, they're not always safe. If you're signing up for an AI journaling service, don't ignore the risks that come with it.

1 Potential Data Breaches

Data breaches are a concern with any online tool, and the risk only grows with lesser-known AI journaling tools. You often can't tell whether the companies behind these tools have adequate security measures in place, so a data leak or hack could make your details publicly available.

If you do decide to use an AI tool for journaling, avoid sharing sensitive information or personal details in your entries. And if you suspect your information may have leaked, I recommend checking whether your data has been exposed right away.

2 Use of Journal Entries for AI Training

Did you know that even high-profile companies like LinkedIn and Facebook use your content to train their AI systems? The practice is common, and user data is one of the main ways these models improve. It's reasonable to assume that at least some AI journaling platforms do the same, which would mean your innermost thoughts and reflections are being analyzed to improve the chatbot's responses.

Some people may be fine with this, but I don't want my personal entries to end up in a training dataset. Wherever you stand on the matter, check the company's privacy policy and terms of service before you start using its AI journaling tools. Some companies let you opt out of having your data used for AI training, so look for that setting if you plan to keep using the tool.

3 Misinterpretation of Input

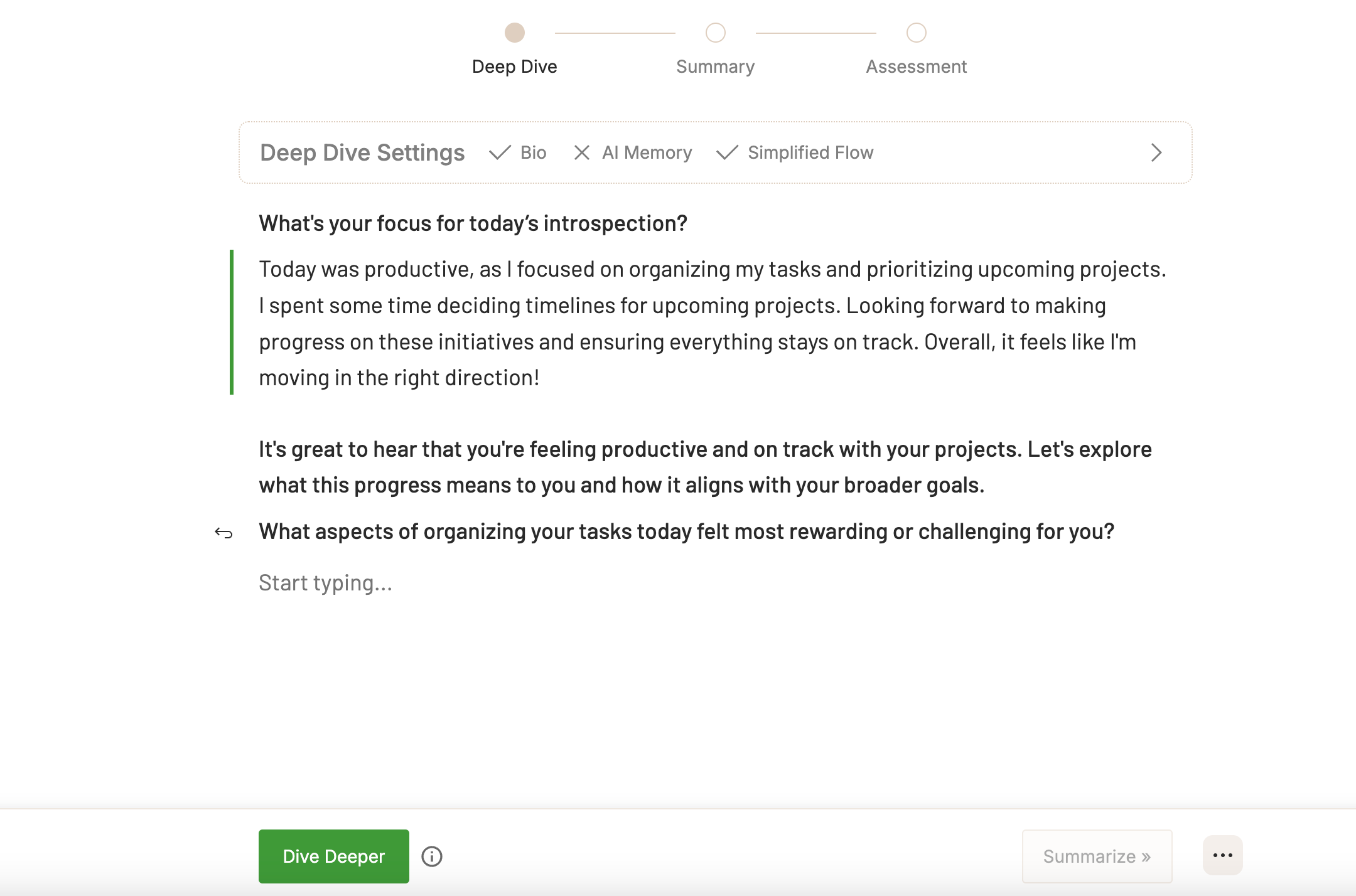

I'm not saying AI journaling tools aren't useful. Deepwander, an AI journaling tool I tried, analyzed my entries and asked follow-up questions to help me reflect further. That's genuinely helpful, but it's important to remember that, at the end of the day, you're dealing with an AI model.

Today's advanced AI models may hallucinate less than they once did, but they can still misinterpret your entries, give you misguided advice, or steer your attention toward irrelevant details.

Keep in mind that the AI model doesn't know your background or the nuances that go into a well-thought-out decision, so it may overlook important aspects of your situation and point you in the wrong direction. Just as there are dangers in using AI as a free therapist, there are risks in relying too heavily on an AI model's prompts and questions while journaling.

4 The Platform Might Be Profiling Users

Your journal entries and the topics you explore can let AI companies build a comprehensive profile of you. While this is meant to help the AI model give more accurate and helpful responses, it's also a security risk, since a hack could expose those personal details.

There's also a chance that your profile could be sold to advertisers, who could use it to serve you targeted ads. Although this is invasive, some companies get away with it because they disclose these practices in their fine print, which users often overlook.

AI journaling tools have their benefits. But if you'd rather not put your personal information at risk, consider using a traditional journal or an offline writing tool instead.