I’m really excited about the release of Apple Intelligence, but I believe there’s plenty of room for improvement. Apple could add several features to make it even better. Here are my ideas on what could take Apple’s suite of AI features to the next level.

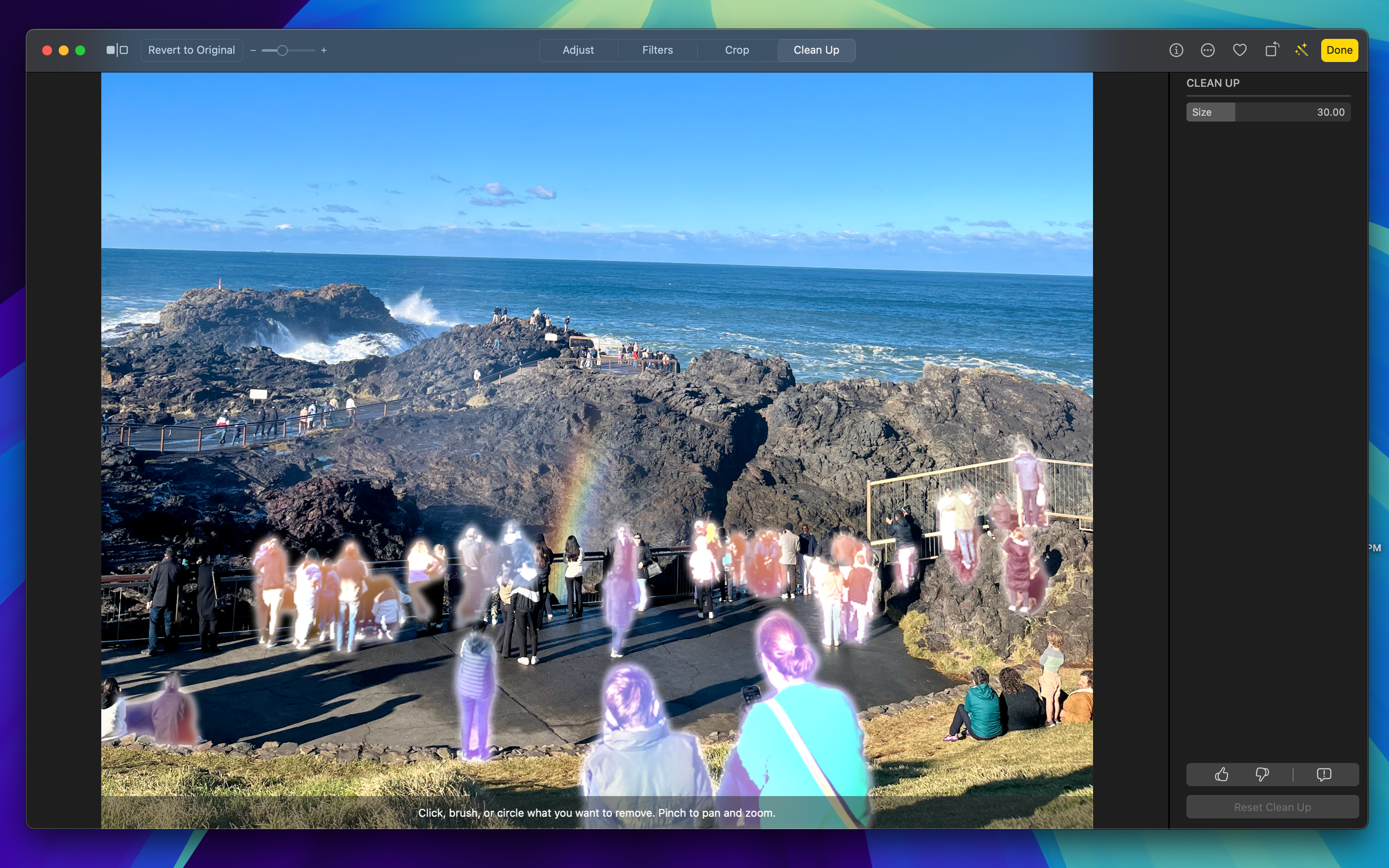

Apple Intelligence offers just one major image-editing feature: Clean Up, which, like Google’s Magic Eraser, lets you remove unwanted objects from photos. While it’s a welcome addition, it doesn’t feel groundbreaking, especially since Google and Samsung have offered similar tools for quite a while now.

Beyond Clean Up, Apple Intelligence offers very little in the way of photo editing tools. In contrast, the Google Pixel 9 has several standout AI features, like Add Me, which ensures everyone is included in group photos, or Reimagine, which lets you replace parts of an image by describing what you want in a text prompt. It would be great if Apple could take inspiration from Google and introduce similar features.

As someone who isn’t very skilled at editing photos, I’d love a feature that lets me generate filters based on a text prompt. I could describe which colors I want to stand out more or the kind of vibe I’m aiming for, and the AI model would create a filter to match that description.

2 More Realistic Image Generation

Apple also introduced a new app called Image Playground as part of Apple Intelligence, allowing users to generate images from a text prompt in three different art styles: Animation, Illustration, and Sketch. It integrates smoothly with apps like Messages and even third-party platforms. While the implementation is well done, I’m not a fan of the results.

The art styles feel too cartoonish for my taste, and I can’t see myself using Image Playground to create images and send them to friends or family. The model works well for Genmoji, which lets you create completely new personalized emojis via a text prompt, but there should be more realistic art styles available.

One possible reason for the limited styles is that the image diffusion model runs on-device for better privacy. However, I wouldn’t mind a more realistic image generation model that runs on Apple’s Private Cloud Compute, which could handle the higher computational demands and also deletes all your data after processing your requests.

3 Call Screening

One of my favorite features on the Google Pixel is call screening, where Google Assistant answers calls for you and provides a live transcript, helping you decide whether to take the call. It can even pick up calls from unknown numbers for you, and if it detects that it’s a robocall or a spam call, Google Assistant will automatically hang up the call without ever bothering you.

It would be great if Siri could do something similar and generate automatic replies based on context. For example, if your iPhone knows you’re out, Siri could automatically ask a delivery person to leave the package at your door.

Unfortunately, Apple Intelligence is currently limited to generating a transcript and a summary of a phone call. This is an area Apple should consider expanding into.

4 Better Live Translation Features

While you can use the built-in Translate app for basic tasks, like typing text and having it read aloud in another language, I can’t help but feel Apple Intelligence could contribute much more.

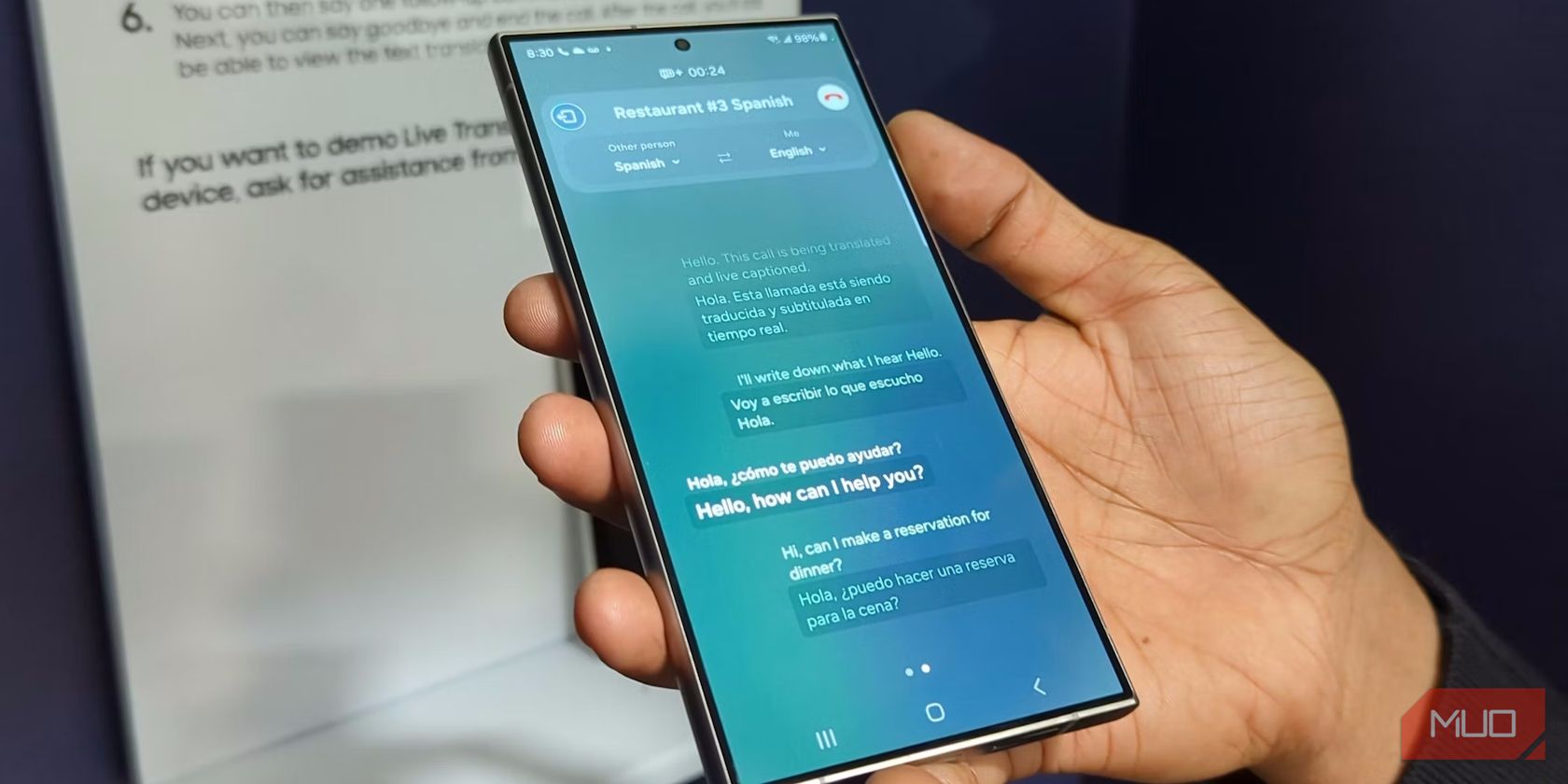

What I’d really like to see are real-time translation tools that work system-wide. A great example is Samsung’s Live Translate, which can transcribe and translate conversations in real time during phone calls. Google offers similar features that work across multiple apps, with all the processing happening on-device.

Since both Samsung and Google have already implemented this, and their models run efficiently on-device, I don’t see why Apple hasn’t focused on translation features with Apple Intelligence.

5 The Option to Choose Your Third-Party LLM

Although Siri is getting a major upgrade with features like on-screen awareness, it still might not handle every request. To fill those gaps, it uses ChatGPT as a fallback to generate responses or answer questions about photos or documents.

While ChatGPT is great, I wish I could choose which third-party LLM I want to use, similar to how you can change your default search engine. We’ve already seen ChatGPT alternatives that excel at specific tasks. It would be even better if users could set preferences for different tasks; for example, automatically using Claude for image-related questions but switching to Gemini or ChatGPT for text generation.

So, these are the features I’d love to see on Apple Intelligence. Even without them, there’s still plenty to look forward to as we see how Apple’s AI suite stacks up against Google’s and Samsung’s offerings. Although Apple Intelligence isn’t publicly available yet, you can try it in the iOS 18.1 and macOS 15.1 betas. Just remember that your experience may not be entirely stable, as these are still early experimental builds.